
# Implementing a Handwritten Digit Recognition Model in TensorFlow with the MNIST Dataset

<br/>

## Source Code: Basic MNIST Handwriting Recognition Model

____

<pre><code class="python" style="font-size:15px">import random

import matplotlib.pyplot as plt
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.Session)
from tensorflow.examples.tutorials.mnist import input_data  # removed in TF 2.x

# Load MNIST with one-hot encoded labels
mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

# 10 classes: the digits 0 through 9
nb_classes = 10

# Each 28x28 image is flattened into a 784-value vector
X = tf.placeholder(tf.float32, [None, 784])
Y = tf.placeholder(tf.float32, [None, nb_classes])

W = tf.Variable(tf.random_normal([784, nb_classes]))
b = tf.Variable(tf.random_normal([nb_classes]))

# Softmax regression: one probability per class
hypothesis = tf.nn.softmax(tf.matmul(X, W) + b)

# Cross-entropy cost averaged over the batch
cost = tf.reduce_mean(-tf.reduce_sum(Y * tf.log(hypothesis), axis=1))
optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1).minimize(cost)

# A prediction is correct when the most probable class matches the label
is_correct = tf.equal(tf.argmax(hypothesis, 1), tf.argmax(Y, 1))
accuracy = tf.reduce_mean(tf.cast(is_correct, tf.float32))

training_epochs = 20  # number of passes over the full training set
batch_size = 100      # number of examples per training step

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())

    for epoch in range(training_epochs):
        avg_cost = 0
        total_batch = int(mnist.train.num_examples / batch_size)

        for i in range(total_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            c, _ = sess.run([cost, optimizer], feed_dict={X: batch_xs, Y: batch_ys})
            avg_cost += c / total_batch

        print('Epoch:', '%04d' % (epoch + 1), '| cost =', '{:.9f}'.format(avg_cost))

    print("---------------------------------------------------------------------")
    print("Learning finished")
    print("Accuracy : ", accuracy.eval(session=sess, feed_dict={X: mnist.test.images, Y: mnist.test.labels}))

    # Show 5 random test images together with the model's prediction
    for _ in range(5):
        r = random.randint(0, mnist.test.num_examples - 1)
        print("\nTest Image -> ", sess.run(tf.argmax(mnist.test.labels[r:r + 1], 1)))
        plt.imshow(mnist.test.images[r:r + 1].reshape(28, 28), cmap='Greys', interpolation='nearest')
        plt.show()
        print("Prediction: ", sess.run(tf.argmax(hypothesis, 1), feed_dict={X: mnist.test.images[r:r + 1]}))
</code></pre>

<pre><code class="python" style="font-size:15px">Output
Epoch: 0001 | cost = 2.881752090
...
Epoch: 0020 | cost = 0.412588274
---------------------------------------------------------------------
Learning finished
Accuracy : 0.894
</code></pre>
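The baseline graph boils down to three formulas: `hypothesis = softmax(XW + b)`, the cross-entropy `cost = mean(-sum(Y * log(hypothesis)))`, and accuracy as the fraction of rows where `argmax(hypothesis) == argmax(Y)`. As a rough sketch, the pure-Python code below recomputes those formulas on a hypothetical 2-example, 3-class batch with made-up logits instead of real MNIST tensors; the helper names are illustrative only and not part of TensorFlow.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (what tf.nn.softmax computes)."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, one_hot):
    """-sum(Y * log(hypothesis)) for a single example."""
    return -sum(y * math.log(p) for p, y in zip(probs, one_hot))


def argmax(values):
    """Index of the largest value (what tf.argmax(..., 1) returns per row)."""
    return max(range(len(values)), key=lambda i: values[i])


# Hypothetical logits (XW + b) for 2 examples and 3 classes
logits = [[2.0, 1.0, 0.1],
          [0.5, 2.5, 0.3]]
labels = [[1, 0, 0],   # first example belongs to class 0
          [0, 0, 1]]   # second example belongs to class 2

probs = [softmax(row) for row in logits]
cost = sum(cross_entropy(p, y) for p, y in zip(probs, labels)) / len(labels)
correct = [argmax(p) == argmax(y) for p, y in zip(probs, labels)]
accuracy = sum(correct) / len(correct)

print("cost =", round(cost, 4))
print("accuracy =", accuracy)  # first prediction right, second wrong -> 0.5
```

The second example is confidently misclassified (most probability on class 1), so it contributes most of the cost; this is the same signal gradient descent uses to adjust `W` and `b`.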

## Source Code: MNIST Model with a Neural Network

____

<pre><code class="python" style="font-size:15px">import tensorflow as tf

import random

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data

# one_hot encoding 옵션

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

# 0~9까지 숫자 10개

nb_classes = 10

X = tf.placeholder(tf.float32, [None, 784])

Y = tf.placeholder(tf.float32, [None, nb_classes])

# Neural Network 구성

W1 = tf.Variable(tf.random_normal([784, 256]))

b1 = tf.Variable(tf.random_normal([256]))

L1 = tf.sigmoid(tf.matmul(X, W1) + b1)

W2 = tf.Variable(tf.random_normal([256, 256]))

b2 = tf.Variable(tf.random_normal([256]))

L2 = tf.sigmoid(tf.matmul(L1, W2) + b2)

W3 = tf.Variable(tf.random_normal([256, nb_classes]))

b3 = tf.Variable(tf.random_normal([nb_classes]))

hypothesis = tf.nn.softmax(tf.matmul(L2, W3) + b3)

cost = tf.reduce_mean(-tf.reduce_sum(Y * tf.log(hypothesis), axis=1))

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1).minimize(cost)

is_correct = tf.equal(tf.arg_max(hypothesis, 1), tf.arg_max(Y, 1))

accuracy = tf.reduce_mean(tf.cast(is_correct, tf.float32))

training_epochs = 20 # 전체 데이터셋 학습시킬 횟수

batch_size = 100 # 한번에 실행시킬 데이터 갯수

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

for epoch in range(training_epochs):

avg_cost = 0

total_batch = int(mnist.train.num_examples / batch_size)

for i in range(total_batch):

batch_xs, batch_ys = mnist.train.next_batch(batch_size)

c, _ = sess.run([cost, optimizer], feed_dict={X:batch_xs, Y:batch_ys})

avg_cost += c / total_batch

print('Epoch:', '%04d' % (epoch + 1), '| cost =', '{:.9f}'.format(avg_cost))

print("---------------------------------------------------------------------")

print("Learning finished")

print("Accuracy : ", accuracy.eval(session=sess, feed_dict={X: mnist.test.images, Y: mnist.test.labels}))

for epoch in range(training_epochs):

r = random.randint(0, mnist.test.num_examples - 1)

# print("Test Random Label: ", sess.run(tf.argmax(mnist.test.labels[r:r + 1], 1)))

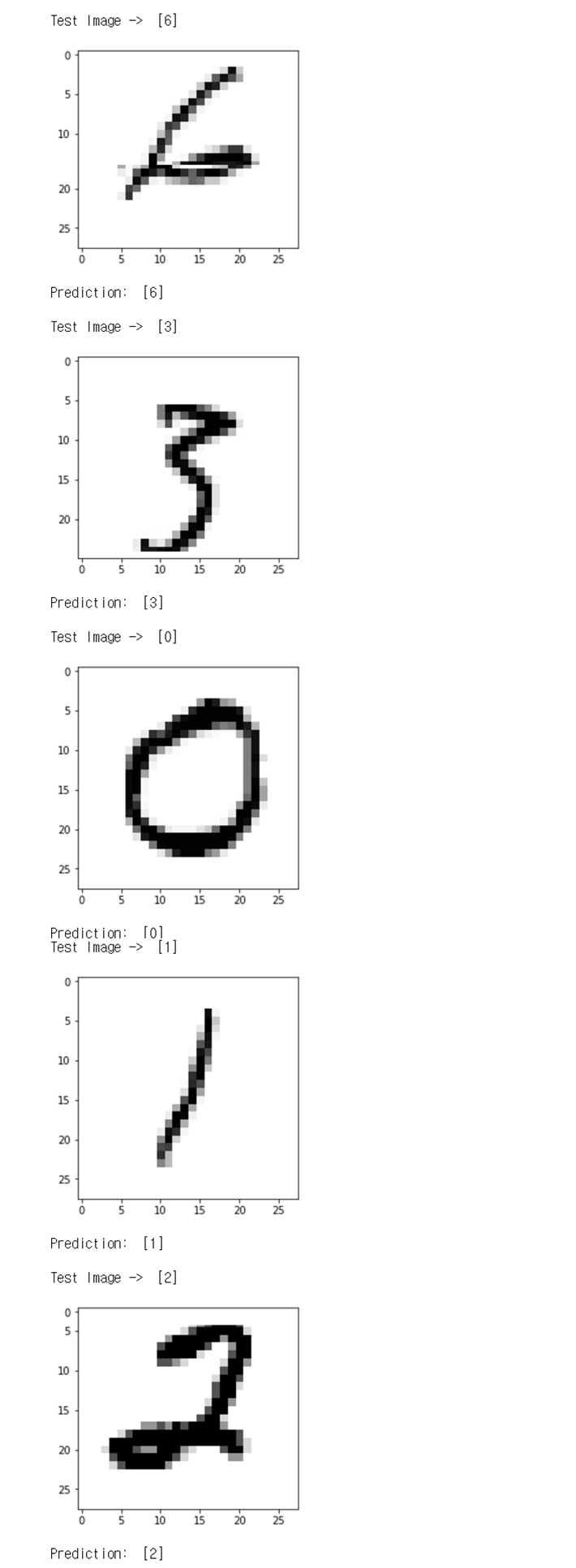

print("\nTest Image -> ", sess.run(tf.argmax(mnist.test.labels[r:r + 1], 1)))

plt.imshow(mnist.test.images[r:r + 1].reshape(28, 28), cmap='Greys', interpolation='nearest')

plt.show()

print("Prediction: ", sess.run(tf.argmax(hypothesis, 1), feed_dict={X: mnist.test.images[r:r + 1]}))

</code></pre>

<pre><code class="python" style="font-size:15px">MNIST NN output
Epoch: 0001 | cost = 1.838566279
...
Epoch: 0020 | cost = 0.191526052
---------------------------------------------------------------------
Learning finished
Accuracy : 0.9128
</code></pre>
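The neural-network version replaces the single `XW + b` with two hidden layers, `L1 = sigmoid(XW1 + b1)` and `L2 = sigmoid(L1W2 + b2)`, before the final softmax. The sketch below traces that forward pass in pure Python for one input vector, assuming tiny hypothetical sizes (4 inputs, 3 hidden units per layer, 2 classes) and randomly drawn weights in place of the real 784-256-256-10 layout. It shows only the forward computation, not training.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, W, b):
    """Compute xW + b for one input row x, weight matrix W (one row per input), bias b."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def random_matrix(rows, cols, rng):
    # Like tf.random_normal: entries drawn from a standard normal distribution
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n_in, n_hidden, n_classes = 4, 3, 2  # hypothetical toy sizes (real model: 784, 256, 10)

W1, b1 = random_matrix(n_in, n_hidden, rng), [rng.gauss(0.0, 1.0) for _ in range(n_hidden)]
W2, b2 = random_matrix(n_hidden, n_hidden, rng), [rng.gauss(0.0, 1.0) for _ in range(n_hidden)]
W3, b3 = random_matrix(n_hidden, n_classes, rng), [rng.gauss(0.0, 1.0) for _ in range(n_classes)]

x = [0.0, 0.5, 1.0, 0.2]  # one made-up "image" with 4 pixel values
L1 = [sigmoid(z) for z in dense(x, W1, b1)]
L2 = [sigmoid(z) for z in dense(L1, W2, b2)]
hypothesis = softmax(dense(L2, W3, b3))

print("L1:", [round(v, 3) for v in L1])
print("L2:", [round(v, 3) for v in L2])
print("hypothesis:", [round(p, 3) for p in hypothesis])
```

Every hidden value is squashed into (0, 1) by the sigmoid, and only the last layer goes through softmax, so `hypothesis` is still a probability distribution over the classes, exactly as in the baseline.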

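- Using the MNIST dataset, I trained models on images of handwritten digits and implemented them so that they predict the digit shown in a test image.

- In these runs, the model built as a neural network reached slightly higher test accuracy than the basic model (0.9128 vs. 0.894).

- Accuracy changed depending on the number of input and output neurons in each layer, the number of epochs, and how many layers are stacked in the hypothesis.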
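One concrete way to compare how layer count and layer width change the model is to count trainable parameters: each weight `tf.Variable` of shape `[n_in, n_out]` adds `n_in * n_out` values and each bias adds `n_out`. The hypothetical helper below (not from the lecture code) applies that rule to the two architectures in this post.

```python
def count_params(layer_sizes):
    """Count weights + biases of fully connected layers, e.g. [784, 256, 256, 10]."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # W has shape [n_in, n_out], b has shape [n_out]
    return total


baseline = count_params([784, 10])              # softmax regression: W, b
neural_net = count_params([784, 256, 256, 10])  # W1/b1, W2/b2, W3/b3

print("baseline:", baseline)      # 7850
print("neural net:", neural_net)  # 269322
```

The neural network has roughly 34 times as many parameters as the baseline, which gives it more capacity to fit the data but also makes it slower to train.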

> Source code adapted from Prof. Sung Kim's lecture series "Machine Learning for Everyone" (모두를 위한 머신러닝): ([https://hunkim.github.io/ml/](https://hunkim.github.io/ml/))
